Tools: Collaboration with Transkribus

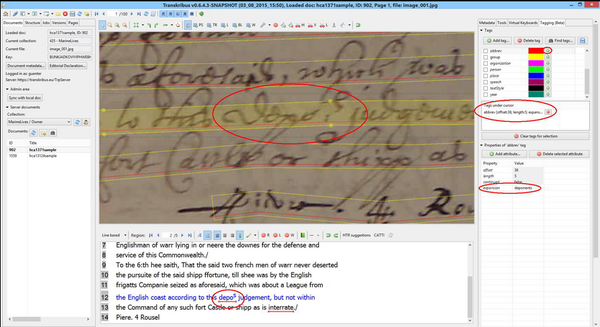

MarineLives is exploring the use of Transkribus tools to scan signatures and markes at the bottom of High Court of Admiralty depositions as input to a proposed April 2018 Data Study Group with the Alan Turing Institute.

We are also structuring a project with the READ/Transkribus project team to explore key word spotting technology applied to English language legal records from the early and mid-C17th. The project is planned to start in April 2018 and to run through to October 2018.

Signature/Marke recognition

Goals

- Structure a challenge for an Alan Turing Institute led Data Study Group to explore educational, occupational and other segmentation in MarineLives data concerning early C17th High Court of Admiralty deponents, without a priori assumptions as to possible groupings.

- Provide a MarineLives data set to the Alan Turing Institute to support the challenge to the proposed Data Study Group, April 16th-20th, 2018. The data set would include digital files of the signatures and markes affixed to the depositions by deponents, pre-processed using Transkribus' Handwritten Text Recognition engine, together with data categorising each deponent in terms of residence, occupation and age, and the date of the deposition.

- Data Study Groups take place three times a year and are a week long. Participating researchers are drawn from the Turing Institute's five founding universities (University of Cambridge, University of Edinburgh, University of Oxford, University College London and the University of Warwick) and the wider academic community. The Director of the Data Study Groups is Dr Sebastian Vollmer, Turing Fellow and Associate Professor at Warwick. Past Data Study Group partners have been drawn from industry, and include Codecheck, Dstl and Inmarsat. The April 2018 DSG is unusual in that the theme is 'Data Science for Social Good', with Accenture covering the costs of participants.

Characterisation of data

- MarineLives has a sizeable semi-structured data set of deponents who made witness statements in the English High Court of Admiralty in the first half of the seventeenth century. These data include name, place of residence, occupation and age, as well as the date of the deposition. The statements are handwritten, recorded in bound manuscript volumes held at the National Archives, Kew. They have been digitally imaged and are available in the MarineLives Semantic MediaWiki. Each deposition is acknowledged with the signature or marke of the deponent at its end, which would have been affixed after the written deposition had been read aloud to the deponent.

SMW platform and API

The MarineLives wiki is built on a PHP-based stack:

- MediaWiki

- Semantic MediaWiki extension to allow storage and querying of data across pages

- Semantic Forms extension to allow editing of pages as structured data

- Custom extensions for folio navigation, basic transcription, and improved behaviour to match transcription expectations.
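As a rough illustration of how structured data might be pulled from a stack like this, the sketch below builds a request URL for the Semantic MediaWiki `ask` API module. The endpoint URL and the `Has occupation` and `Has age` property names are assumptions made for the example; only the "Has literacy" property is mentioned on this page. No request is sent.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def build_ask_url(api_base: str, conditions: list[str],
                  printouts: list[str], limit: int = 50) -> str:
    """Build a Semantic MediaWiki `action=ask` URL (no request is made)."""
    # Conditions are wrapped as [[Property::Value]]; printouts are prefixed with "?".
    parts = ["".join(f"[[{c}]]" for c in conditions)]
    parts += [f"?{p}" for p in printouts]
    parts.append(f"limit={limit}")
    params = {"action": "ask", "query": "|".join(parts), "format": "json"}
    return f"{api_base}?{urlencode(params)}"


# Hypothetical endpoint and printout properties, for illustration only.
url = build_ask_url(
    "https://wiki.example.org/api.php",
    conditions=["Has literacy::Marke"],
    printouts=["Has occupation", "Has age"],
)

# Decode the query parameter again to show what the wiki would receive.
query = parse_qs(urlsplit(url).query)["query"][0]
print(query)
```

The real property names would need to be checked against the wiki before a query like this could return anything useful.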


Scoping the data

Scoping method where images have already been transcribed or partially transcribed: once we have transcribed a deposition or personal answer, we record every signature and marke with a rendition of the signature or marke, together with the observation [SIGNATURE] or [MARKE]. Our search engine has limitations, but enclosing the search term in inverted commas helps exclude extraneous results. The search returns wiki pages rather than individual depositions, and one wiki page may contain two or even three depositions, so we think that a page with multiple signatures and markes will show only once in a signature or marke related search. Our scoping method will therefore underestimate the number of signatures and markes we have in the wiki. Most depositions are, however, longer than one page, which limits the undercount. Some statistical sampling would allow a better estimate.

Specifically, we locate the signature or marke on the page with [SIGNATURE, RH SIDE], [SIGNATURE, LH SIDE], [MARKE, RH SIDE] or [MARKE, LH SIDE].

Some signatures are those of interpreters, used where the deponent spoke neither English nor Dutch. The notaries who took down the depositions were English and Dutch speaking, but typically not Spanish, Italian, German or Swedish speaking. Some appear to have spoken French, but not all. There is, we think, some contamination of the search results by deponents called "Marke", whose name matches the marke search term.
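One way to count the bracketed observations directly from transcription text, rather than through page-level search hits, is a regular expression that matches only the upper-case bracketed tags. The sketch below is a minimal illustration; the sample text and the name in it are invented, and it assumes the tags always appear in exactly the forms listed above.

```python
import re
from collections import Counter

# Matches [SIGNATURE], [MARKE] and the located variants such as [MARKE, RH SIDE].
TAG_RE = re.compile(r"\[(SIGNATURE|MARKE)(?:,\s*(RH|LH) SIDE)?\]")


def count_tags(text: str) -> Counter:
    """Count every tag occurrence, keyed by (kind, side)."""
    counts = Counter()
    for kind, side in TAG_RE.findall(text):
        counts[(kind, side or "UNSPECIFIED")] += 1
    return counts


# Invented sample: one page holding three depositions, one by a deponent named Marke.
sample = (
    "... Marke Example of Wapping ... [MARKE, RH SIDE]\n"
    "... second deposition ... [SIGNATURE, LH SIDE]\n"
    "... third deposition ... [SIGNATURE]\n"
)
counts = count_tags(sample)
print(counts)
```

Because the pattern requires the square brackets and capitals, the forename "Marke" is not counted, and a page holding several depositions contributes one count per tag rather than one per page.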

Probably one third of the deponents are not resident in England (or Scotland, or Wales or Ireland). These deponents will have learned handwriting in non-English contexts, so comparing AI analysis of their signatures/markes with those of signatures/markes learned in an English language context would itself be interesting.

Simple searches of our wiki reveal the following counts:

"[SIGNATURE]" = 3315

"[MARKE]" = 1275

"[SIGNATURE, RH SIDE]" = 2582

"[SIGNATURE, LH SIDE]" = 129

"[MARKE, RH SIDE]" = 424

"[MARKE, LH SIDE]" = 7

A significant number of wiki pages exist where an image has been posted but there is not yet a transcription. We need to work out how to search the wiki to estimate the number of such "blank" pages, but a wild guess is that we have 2,000-3,000 pages of images without any transcribed data. This would suggest that something in the order of a further 1,000-1,500 signatures and markes are available as images, but without transcription of the name, location, occupation or age information, or even knowledge as to which wiki page contains the signature or marke.

AI/handwriting recognition could be used to identify these pages, locating both the signatures/markes themselves and the raw name, location, occupation and age data. Devising and optimising AI to do this would itself be a major benefit to archives, libraries and MarineLives, since it would make it possible (with suitable semi-automated processes) to create metadata on a large scale for digital imaging projects where no prior metadata exists.
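The search counts and the "wild guess" above can be turned into a small worked calculation. The sketch below assumes the figures are page hits as quoted; whether the plain and the located searches overlap is not stated, so the two groups are kept separate. The sample of per-page counts is hypothetical and only shows how statistical sampling could replace the guessed rate of about one signature or marke for every two pages.

```python
# Page-hit counts quoted in the search results above.
hits = {
    "[SIGNATURE]": 3315,
    "[MARKE]": 1275,
    "[SIGNATURE, RH SIDE]": 2582,
    "[SIGNATURE, LH SIDE]": 129,
    "[MARKE, RH SIDE]": 424,
    "[MARKE, LH SIDE]": 7,
}

# Share of signatures among the plain [SIGNATURE] / [MARKE] hits.
plain_total = hits["[SIGNATURE]"] + hits["[MARKE]"]
signature_share = hits["[SIGNATURE]"] / plain_total

# Right-hand share among the hits that record a position on the page.
located_signatures = hits["[SIGNATURE, RH SIDE]"] + hits["[SIGNATURE, LH SIDE]"]
located_markes = hits["[MARKE, RH SIDE]"] + hits["[MARKE, LH SIDE]"]
rh_share_signatures = hits["[SIGNATURE, RH SIDE]"] / located_signatures
rh_share_markes = hits["[MARKE, RH SIDE]"] / located_markes


def rate_from_sample(counts_per_page: list[int]) -> float:
    """Mean number of signatures/markes per sampled page."""
    return sum(counts_per_page) / len(counts_per_page)


def estimate_range(pages_low: int, pages_high: int, rate: float) -> tuple[int, int]:
    """Scale a per-page rate up to a range of untranscribed pages."""
    return round(pages_low * rate), round(pages_high * rate)


# Hypothetical sample of ten transcribed pages (not real data).
hypothetical_sample = [0, 1, 0, 1, 2, 0, 0, 1, 0, 0]
sample_rate = rate_from_sample(hypothetical_sample)
low, high = estimate_range(2000, 3000, sample_rate)

print(f"signature share of plain hits: {signature_share:.1%}")
print(f"estimated untranscribed signatures/markes: {low}-{high}")
```

A real sample drawn at random from transcribed pages would give a rate with a measurable error, which the guessed figure cannot.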

Sample data

Methodology

All depositions, whether signed or marked, contain name, place, occupation and age information at the start of the deposition (and always in this order), both on the manuscript images and in the transcriptions.

So there are two possibilities. Either use AI/Natural Language Processing to identify the section of a wiki page (transcription or image) which contains the name, location, occupation and age information, and extract this automatically into a database; or extract these data manually in advance of the Data Study Group.
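For the automatic route, a first pass over transcribed text could be as simple as a pattern match on the opening line of a deposition. The sketch below is a minimal illustration under strong assumptions: the opening formula "Name of Place, Occupation, aged N" and the sample line are invented for the example and are not taken from the MarineLives transcriptions. Real openings will vary in punctuation and may give ages in words, which this pattern does not handle.

```python
import re
from typing import Optional

# Assumed opening formula: "<name> of <place>, <occupation>, aged <age>".
OPENING_RE = re.compile(
    r"^(?P<name>.+?) of (?P<place>.+?), (?P<occupation>[^,]+?),? aged (?P<age>\d+)"
)


def parse_opening(line: str) -> Optional[dict]:
    """Return name, place, occupation and age, or None if the line does not fit."""
    match = OPENING_RE.match(line)
    if match is None:
        return None
    record = match.groupdict()
    record["age"] = int(record["age"])
    return record


# Invented example line, for illustration only.
sample_line = (
    "John Example of Wapping in the County of Middlesex, "
    "Mariner, aged 34 yeares or thereabouts"
)
record = parse_opening(sample_line)
print(record)
```

Lines that do not fit the pattern return `None`, so they can be queued for manual extraction rather than silently mis-parsed.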

For a subset of the transcribed depositions, we have created semantic biographies. Note that this is NOT a random selection, but focuses on certain occupations (e.g. mariners, merchants, coopers, lightermen, watermen...). These biographies contain structured, linked data, which are extractable. Specifically they include the name, place, occupation and age data for the deponent, together with the spelling of the name of the deponent as transcribed by the notary and separately the spelling of the name of the deponent as signed by the deponent himself (or occasionally herself), together with a "Has literacy" code (Signature or Marke).

Access Semantic biographies

Access Semantic occupations

Semantic biographies appended with marke = 174

Semantic biographies appended with signature = 469
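The fields described for the semantic biographies suggest a simple flat record per deponent, which could be exported alongside the signature or marke image for the Data Study Group. The sketch below is one possible shape, not the actual MarineLives schema: the field names, the `image_file` column and the sample record are all hypothetical.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from typing import Optional

LITERACY_CODES = {"Signature", "Marke"}  # the two "Has literacy" values


@dataclass
class DeponentRecord:
    name_by_notary: str            # spelling recorded by the notary
    name_as_signed: Optional[str]  # spelling in the signature; None for a marke
    place: str
    occupation: str
    age: Optional[int]
    deposition_date: str           # assumed ISO format, e.g. "1650-01-01"
    literacy: str                  # "Signature" or "Marke"
    image_file: str                # hypothetical path to the cropped image

    def __post_init__(self) -> None:
        if self.literacy not in LITERACY_CODES:
            raise ValueError(f"unknown literacy code: {self.literacy!r}")


FIELDS = [f.name for f in fields(DeponentRecord)]


def to_csv(records: list[DeponentRecord]) -> str:
    """Serialise records to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buffer.getvalue()


# Invented record, for illustration only.
csv_text = to_csv([
    DeponentRecord("John Example", "Jno Example", "Wapping", "Mariner",
                   34, "1650-01-01", "Signature", "example-signature.png"),
])
print(csv_text)
```

Keeping the notary's spelling and the deponent's own spelling as separate columns preserves the distinction the biographies already make.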

Proposed ingestion of signature/marke data using Transkribus

Proposed team

Researchers: Researchers affiliated to the Alan Turing Institute

Partner organisation: MarineLives

Opportunities to get involved

- Considering the formation of a small volunteer based academic panel to advise on the palaeographical characteristics of signatures and markes

Bibliography

[ADD DATA]

Key word spotting

Goals

- Build and test an interface for historical researchers to interact with Transkribus key word spotting technology.

- Searching for words in the image, not the text rendered transcription

Methodology

[ADD DATA]

Proposed team

Colin Greenstreet (MarineLives)

Michael Bennett (Sheffield)

Transkribus staff

Potential panel of historical researchers

Opportunities to get involved

Potential panel of historical researchers

Bibliography: Transkribus use cases; signature recognition technology; key word spotting technology

Blog articles

Alex Hailey (Curator, Modern Archives and Manuscripts), 'Using Transkribus for handwritten text recognition with the India Office Records', British Library, Digital scholarship blog, Jan. 23rd, 2018[1]

Nora McGregor (Digital Curator, British Library), '8th Century Arabic science meets today's computer science, Or, Announcing a Competition for the Automatic Transcription of Historical Arabic Scientific Manuscripts', British Library Digital Scholarship blog, Feb. 8th, 2018[2]

Interactive Keywords Spotting Tool, showcased at European Researchers' Night, READ blog entry, Oct. 23, 2017[3]

Github

Transkribus/VCG-DUTH-Word_Spotting_By_Example, Github, 2018[4]

Journal articles

K. Zagoris, I. Pratikakis and B. Gatos, "Segmentation-Based Historical Handwritten Word Spotting Using Document-Specific Local Features," 2014 14th International Conference on Frontiers in Handwriting Recognition, Heraklion, 2014, pp. 9-14.

K. Zagoris, I. Pratikakis, and B. Gatos, “A framework for efficient transcription of historical documents using keyword spotting,” in Historical Document Imaging and Processing (HIP’15), 3rd International Workshop on, August 2015, pp. 9–14.

K. Zagoris, I. Pratikakis, B. Gatos. 2017 Unsupervised Word Spotting in Historical Handwritten Document Images using Document-oriented Local Features. Transactions on Image Processing. Under Review.

Multimedia

Günter Mühlberger, 01 Presentation: Transkribus - the status quo and future plans, Transkribus User Conference, Nov. 2-3, 2017, Youtube video, pub. Jan 23. 2018[5]

02 Presentation: Transkribus in practice - Reports from Users, Transkribus User Conference, Nov. 2-3, 2017, Youtube video[6]

03 Presentation: Transkribus keyword searching and indexing, Transkribus User Conference, Nov. 2-3, 2017, Youtube video[7]

04 Presentation: More reports from users: first part, Transkribus User Conference, Nov. 2-3, 2017, Youtube video[8]

04 Presentation: More reports from users: second part, Transkribus User Conference, Nov. 2-3, 2017, Youtube video[9]

05 Presentation: Panel discussion, Transkribus User Conference, Nov. 2-3, 2017, Youtube video[10]

Transkribus background

- [1] Alex Hailey (Curator, Modern Archives and Manuscripts), 'Using Transkribus for handwritten text recognition with the India Office Records', British Library, Digital scholarship blog, Jan. 23rd, 2018, accessed 07/02/2018

- [2] Nora McGregor (Digital Curator, British Library), '8th Century Arabic science meets today's computer science, Or, Announcing a Competition for the Automatic Transcription of Historical Arabic Scientific Manuscripts', British Library Digital Scholarship blog, Feb. 8th, 2018, accessed 07/02/2018

- [3] Interactive Keywords Spotting Tool, showcased at European Researchers' Night, READ blog entry, Oct. 23, 2017, accessed 06/02/2018

- [4] Transkribus/VCG-DUTH-Word_Spotting_By_Example, Github, 2018, accessed 06/02/2018

- [5] Günter Mühlberger, 01 Presentation: Transkribus - the status quo and future plans, Youtube video, pub. Jan 23. 2018, accessed 06/02/2018

- [6] 02 Presentation: Transkribus in practice - Reports from Users, Transkribus User Conference, Nov. 2-3, 2017, Youtube video, accessed 07/02/2018

- [7] 03 Presentation: Transkribus keyword searching and indexing, Transkribus User Conference, Nov. 2-3, 2017, Youtube video, accessed 07/02/2018

- [8] 04 Presentation: More reports from users: first part, Transkribus User Conference, Nov. 2-3, 2017, Youtube video, accessed 07/02/2018

- [9] 04 Presentation: More reports from users: second part, Transkribus User Conference, Nov. 2-3, 2017, Youtube video, accessed 07/02/2018

- [10] 05 Presentation: Panel discussion, Transkribus User Conference, Nov. 2-3, 2017, Youtube video, accessed 07/02/2018